Hi Chris.

Chris Mierzwinski wrote: Wondering if there is a workflow or built in tools in Davinci to do that. If not any suggestions of other programs that can.

There is no built-in workflow for automated multi-camera mono/stereo 3D panoramic 360° video stitching in the current Resolve 15 Beta 5 release.

In the interim, you might take a look at using MistikaVR to do optical-flow-based stitching of your 360° camera rig footage. Then you can bring the final stitched LatLong-formatted media into Resolve for color correction and editing.

If you are using a GoPro 360° camera, you can use the confusingly similarly named GoPro Fusion Studio suite of tools to pre-stitch your GoPro footage before you bring the media into Resolve 15 for editing. (Someone's trademark lawyer must have been asleep at the wheel for the brand name of GoPro Fusion Studio to be allowed...)

* * *

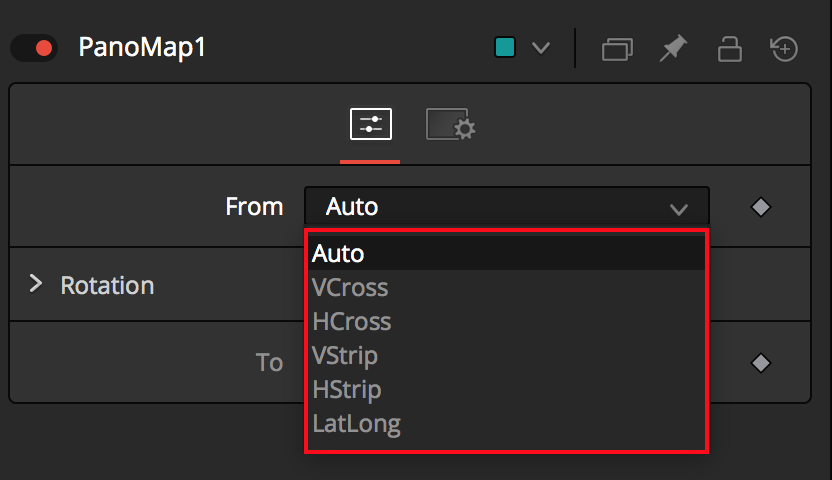

The Resolve Studio 15 Fusion page VR tools lack an easy-to-use option in the PanoMap node for doing parametric circular fisheye/domemaster to LatLong image projection conversions.

- PanoMap Node (PanoMap.png)
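To make the kind of conversion I mean concrete, here is a minimal Python sketch of the per-pixel math behind a circular fisheye to LatLong remap. It is not how the PanoMap node works internally. It assumes an ideal equidistant ("angular") fisheye looking straight down the +Z axis, normalised 0..1 image coordinates, and a hypothetical function name and `fov_degrees` parameter of my own choosing. A real lens would also need distortion and centre-offset terms.

```python
import math


def latlong_to_fisheye(u, v, fov_degrees=180.0):
    """Map a normalised LatLong coordinate to a normalised fisheye coordinate.

    u, v are in [0, 1] with (0, 0) at the top-left of the LatLong image.
    Returns (fx, fy) in [0, 1], or None when the view direction falls
    outside the lens's field of view.
    Assumption: ideal equidistant fisheye with its optical axis along +Z.
    """
    # LatLong pixel -> longitude/latitude in radians.
    lon = (u - 0.5) * 2.0 * math.pi
    lat = (0.5 - v) * math.pi

    # Longitude/latitude -> unit direction vector (X right, Y up, Z forward).
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)

    # Angle away from the optical axis (clamped to guard against rounding).
    theta = math.acos(max(-1.0, min(1.0, z)))

    # Equidistant model: image radius grows linearly with theta.
    # r == 1.0 is the edge of the fisheye image circle.
    r = theta / math.radians(fov_degrees / 2.0)
    if r > 1.0:
        return None

    # Angle around the optical axis, then back to 0..1 image space (Y down).
    phi = math.atan2(y, x)
    fx = 0.5 + 0.5 * r * math.cos(phi)
    fy = 0.5 - 0.5 * r * math.sin(phi)
    return fx, fy
```

You would call this once per output pixel and sample the fisheye image at the returned coordinate. A direction that falls behind a 180° lens returns None, which is where a second camera or a black fill would come in.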

The current VR tools in the Fusion page are missing a lot of essential options for dealing with stereo 360° footage when you want to generate stereo disparity data, or do side-by-side or over-under stereo 3D based 360° image projection conversions.

The Fusion 3D system spherical camera in Resolve 15 does not output seamless omni-directional stereo media either.

Also, it is hard to apply the standard Panotools-style A/B/C lens distortion corrections (that are common in 360° stitching tools) to your footage in an editable and resolution-independent fashion, since the Fusion page's LensDistort node hasn't been updated in a really long time. By my guess it was last touched in the Fusion ~7 Standalone era. Ideally the current lens distortion models that are popular in 2018 should be added to the LensDistort node.

- LensDistort Node (LensDistort.png)
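For anyone unfamiliar with the Panotools A/B/C model, here is a rough Python sketch of the radial polynomial as I understand it from the Panotools documentation. The function name is hypothetical. I am assuming the usual conventions: the radius is normalised so that 1.0 is half the shorter image side, the distortion centre is the image centre, and d = 1 - (a + b + c) so the image scale is preserved at r = 1. It does not reflect anything in the LensDistort node.

```python
import math


def panotools_source_pixel(x, y, width, height, a, b, c):
    """Return the source pixel to sample for a corrected (destination) pixel.

    Panotools-style radial model (as assumed here):
        r_src = (a * r^3 + b * r^2 + c * r + d) * r_dest
    with d = 1 - (a + b + c).
    """
    d = 1.0 - (a + b + c)

    # Assumption: distortion centre sits at the image centre.
    cx, cy = width / 2.0, height / 2.0

    # Normalise so r == 1.0 at half of the shorter image side.
    norm = min(width, height) / 2.0
    dx = (x - cx) / norm
    dy = (y - cy) / norm
    r = math.hypot(dx, dy)

    # Radial scale factor applied to the destination offset.
    scale = a * r ** 3 + b * r ** 2 + c * r + d

    # Back to pixel units.
    return cx + dx * scale * norm, cy + dy * scale * norm
```

Because everything is expressed in normalised radius, the same a/b/c values apply at any resolution, which is the resolution-independent behaviour I'd like to see exposed in the node.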

* * *

From the perspective of 3rd parties making scripted tools, fuses, or plugins that would import, manage, and work with multi-camera footage, the MediaIn node + Resolve Scripting API is a bit of a nightmare compared to using Loader/Saver nodes in Fusion Standalone. This is especially true if you want to import a series of still images into the Fusion page.

In the current Resolve 15 Beta 5 release, the MediaOut node is still very end-user hostile if you are trying to do end-to-end 360VR post-production workflows entirely inside of Resolve. For example, you can only save a single movie/image sequence output from a Fusion page composite. This makes it hard to bake multiple parts of a large node tree to disk as multi-channel content and then load that pre-computed/pre-rendered footage back in.

Also, the Resolve 15 Deliver page only allows RGBA-based output, so stereo 360° stitching data like disparity maps and optical flow motion vector channels cannot be exported to disk as EXR media using a MediaOut node.

(Note: The Steakunderwater forum's Reactor toolset developers are working on adding new tools to the Reactor package manager that should make it easier for compositors to work with multi-part and multi-channel EXR footage in both Resolve and Fusion Standalone. At this point the first release of these new EXR tools is expected to come out roughly around the time of SIGGRAPH 2018.)

* * *

The fact that a Resolve Fusion page composite has to be attached to a pre-existing Resolve timeline makes it harder to use the Fusion page as a dedicated tool for processing and stitching 360° video. Well, harder at least when Resolve 15 is compared to what is possible in a fully loaded copy of Fusion Studio 9 (Standalone) that is capable of having all the extra 3rd party Fusion plugins and other addons loaded into it.

I'm looking forward to Resolve 15 adding support for the Fusion SDK. This would allow tools like Chad Capeland's excellent CustomShader3D toolset to be updated to work in Resolve. And the Fusion SDK would allow for Krokodove to be ported to Resolve, too.

* * *

It would be nice to see Resolve get into the 360VR space in a serious way with more dedicated tools and improved workflows. I'd like to think the Resolve product managers would *think big* and have a vision for the future that includes more support for immersive media.

Chris Mierzwinski wrote: Has anyone used Davinci Resolve to stitch multiple videos in a single panorama?

Yes. I did some experiments in panoramic video stitching of Nokia Ozo footage early on in the Resolve beta cycle using my own custom in-house tools (scripts, fuses, macros). With the help of extra tools it was possible to do the multi-camera stitching in Resolve but TBH it was a lot of work compared to using a dedicated package.

Nokia Ozo+ 360° Video Stitching in Resolve 15

This video shows the equirectangular output from stitching Nokia Ozo+ 8 camera 360VR footage in the Resolve 15 Beta. The stitching was done back in March 2018 using a special R&D version of the (now discontinued) KartaVR macros running in an early beta version of Resolve 15's Fusion page.