Jim is correct about testing certain scene types. That's why researchers use a collection of scene types representing a wide variety of encoder challenges:

Xiph encoding test material:

https://media.xiph.org/video/derf/

UVG Dataset:

https://ultravideo.fi/dataset.html

Whitepaper on UVG Dataset:

https://web.archive.org/web/20240619113 ... _ready.pdf

You don't want to just eyeball differences. It's best to use objective image evaluation tools, then confirm the results with your eyes.

Most of these tools require a reference video that must match frame-by-frame to the higher compression file. For a camera file test, that really requires simultaneous recording using two different codecs. I've done that many times using an Atomos Ninja V or Shogun 7 recording ProRes 422, then comparing it to the simultaneously recorded in-camera codec.

However, if the question is image quality of a Resolve-encoded file vs the original file, that only requires a single original file since you are encoding the higher compression file from the original.
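Before running any of these tools, it can help to confirm that the two files really do line up frame for frame. Here is a minimal Python sketch that counts decoded video frames with ffprobe (which ships with ffmpeg). The function names and file names are just placeholders I made up, and it assumes ffprobe is on your PATH:

```python
import subprocess


def ffprobe_frame_count_cmd(path):
    """Build an ffprobe command that decodes the first video stream and counts its frames."""
    return [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",              # first video stream only
        "-count_frames",                       # decode the stream to get an exact count
        "-show_entries", "stream=nb_read_frames",
        "-of", "csv=p=0",                      # print just the number
        path,
    ]


def parse_frame_count(ffprobe_output):
    """Turn ffprobe's one-line output into an integer."""
    first_line = ffprobe_output.strip().splitlines()[0]
    return int(first_line.strip().rstrip(","))


def count_frames(path):
    """Run ffprobe on a file and return its decoded frame count."""
    result = subprocess.run(
        ffprobe_frame_count_cmd(path),
        capture_output=True, text=True, check=True,
    )
    return parse_frame_count(result.stdout)
```

Calling `count_frames("original.mov")` and `count_frames("resolve_export.mp4")` (hypothetical file names) and comparing the two numbers is a quick sanity check. Equal counts don't prove the frames are aligned, but unequal counts tell you right away that the comparison will be off.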

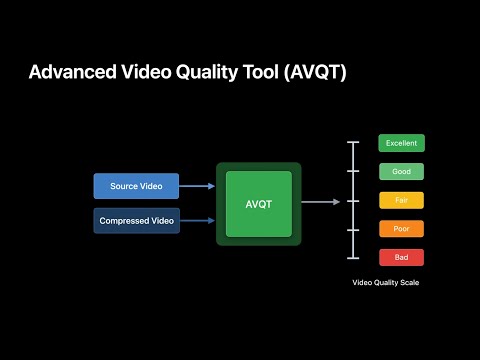

On macOS, Apple provides a free command-line tool called AVQT. It is designed to measure perceptual quality loss, not just a technical parameter. It can be downloaded from the Apple Developer site, but that requires creating a free developer account (click "Account" at the top-right corner of this page):

https://developer.apple.com/

WWDC21 talk on AVQT:

https://developer.apple.com/wwdc21/10145

YouTube tutorial on AVQT:

You can also measure visual generation loss using a tool developed by Netflix called VMAF (Video Multi-Method Assessment Fusion). Unlike AVQT, it is available on both Mac and Windows. VMAF is built into certain versions of ffmpeg. On Mac, ffmpeg-VMAF is available through the Homebrew package manager:

https://brew.sh

On Windows, pre-built ffmpeg-VMAF binaries are available here:

https://www.gyan.dev/ffmpeg/builds/

There is a VMAF web tool, but it only works with 1080p and has other limits. The command-line version is much more capable:

https://vmaf.dev
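
For the ffmpeg route, here is a rough Python sketch of how a VMAF run might be scripted. It assumes your ffmpeg build includes the libvmaf filter and that the JSON log follows the libvmaf 2.x layout (a `pooled_metrics` section). The function names and file names are placeholders of mine, not part of any tool:

```python
import json
import subprocess


def vmaf_cmd(distorted, reference, log_path="vmaf.json"):
    """Build an ffmpeg command that scores `distorted` against `reference` with libvmaf."""
    return [
        "ffmpeg", "-hide_banner",
        "-i", distorted,    # first input: the more compressed file under test
        "-i", reference,    # second input: the original / reference file
        "-lavfi", f"libvmaf=log_fmt=json:log_path={log_path}",
        "-f", "null", "-",  # discard the video output; only the score matters
    ]


def pooled_vmaf_mean(log_text):
    """Read the mean VMAF score from a libvmaf JSON log (assumes the 2.x layout)."""
    data = json.loads(log_text)
    return data["pooled_metrics"]["vmaf"]["mean"]


def run_vmaf(distorted, reference, log_path="vmaf.json"):
    """Run ffmpeg with libvmaf, then return the mean score from the log it wrote."""
    subprocess.run(vmaf_cmd(distorted, reference, log_path), check=True)
    with open(log_path, encoding="utf-8") as f:
        return pooled_vmaf_mean(f.read())
```

Something like `run_vmaf("resolve_export.mp4", "original.mov")` (hypothetical file names) would then return the average score. Note the input order: the filter treats the first input as the file under test and the second as the reference. Both files should have the same resolution and frame rate, otherwise you need to add scaling or frame-rate filters before libvmaf.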