Over Docker wrote:... blackmagic says that BRAW eliminates noise in compression... What Sony does, let's say using h264/5...It turns out that now the iPhone also eliminates noise in compression (ProRes/h264/h265)....

I'm not sure what that means—all cameras and all formats have noise. If you mean compression artifacts, there could be tiny differences, but these will not be noticed by the final viewer after post-production and distribution and under normal viewing conditions.

Over Docker wrote:....It is fantastic that the iPhone can eliminate noise in compression, although details are lost, in many cases this is a great advantage...

Again, I'm not sure what that means. I've shot multi-cam interviews with two iPhone 15 Pro Max phones using a variety of codecs from the Blackmagic Camera App, the iOS camera app and Filmic Pro. If you understand composition and lighting, you can make it look pretty good. It's not equal to a pro camera or even an A7SIII, but it can be fairly good.

The problem is that this requires knowledge, skill, preparation and practice, just like any camera.

Over Docker wrote:....with this in mind, iPhone with ProRes is not very different from BRAW... You want something light and you use 422LT, you want more information you take the ProRes XQ 4444.

I think you are over-thinking this. The main factors are lighting, composition, and understanding the limitations of the given camera system. If you have that knowledge, an iPhone 15 Pro Max can shoot decent interview video. With all other factors held constant, the difference between various ProRes flavors and BRAW can be relatively small. You would rarely shoot ProRes 4444XQ due to the extreme data size. It is very burdensome, especially at resolutions above 4K.
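To put rough numbers on the data size, here is a minimal Python sketch that converts a bitrate into storage per hour. The target bitrates are the approximate figures Apple publishes for 1920x1080 at 29.97 fps; actual files vary with content, and higher resolutions scale up roughly with pixel count. The function and variable names are just for illustration.

```python
# Approximate ProRes target data rates in Mbit/s at 1920x1080, 29.97 fps
# (figures from Apple's published ProRes tables; real files vary with content).
PRORES_MBPS_1080P30 = {
    "ProRes 422 LT": 102,
    "ProRes 422": 147,
    "ProRes 4444 XQ": 500,
}


def gb_per_hour(mbps):
    """Convert a bitrate in megabits per second to decimal gigabytes per hour."""
    bytes_per_second = mbps * 1_000_000 / 8
    return bytes_per_second * 3600 / 1_000_000_000


if __name__ == "__main__":
    for name, mbps in PRORES_MBPS_1080P30.items():
        print(f"{name}: about {gb_per_hour(mbps):.1f} GB per hour")
```

At these rates ProRes 4444 XQ needs well over three times the storage of ProRes 422 for the same footage, before you even go to 4K.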

Except for alpha channel transparency and possible bit depth, there is very little difference in acquisition image quality or editing image quality if comparing ProRes 422 vs ProRes 4444XQ.

The main differences are that the 4444 codec better supports 12-bit data (if available), and that the higher-bitrate variants better withstand generation loss across multiple sequential re-encodes. Sequential re-encoding implies a hand-off each time. By contrast, an NLE timeline can be rendered 1000 times during the edit without degrading quality. The edits are recorded as metadata in the project file or library. Each render reads the original files, not files containing multi-generation losses.

If anyone is curious about generation loss or encoding quality loss, it is straightforward to objectively measure those using some free command-line tools.

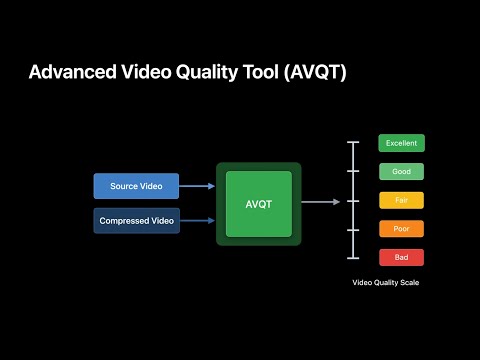

On macOS, Apple provides AVQT (Advanced Video Quality Tool). It is designed to measure perceptual quality loss, not just technical parameters. It can be downloaded from the Apple Developer site, but that requires you to create a free developer account (click "Account" at the top-right corner of this page):

https://developer.apple.com/

WWDC21 talk on AVQT:

https://developer.apple.com/wwdc21/10145

YouTube tutorial on AVQT:

You can also measure visual generation loss using a tool developed by Netflix called VMAF (Video Multi-Method Assessment Fusion). Unlike AVQT, it is available on both Mac and Windows. VMAF is built into certain versions of ffmpeg. On Mac, ffmpeg-VMAF is available through the Homebrew package manager:

https://brew.sh

On Windows, pre-built ffmpeg-VMAF binaries are available here:

https://www.gyan.dev/ffmpeg/builds/
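
For anyone who wants to try the VMAF route, here is a minimal Python sketch that builds the ffmpeg command line. It assumes an ffmpeg build that includes the libvmaf filter is on your PATH, and that both clips have the same resolution, frame rate and frame count so frames line up one-to-one. The file names and the helper function names are hypothetical; `log_path` and `log_fmt` are options of the libvmaf filter.

```python
import subprocess


def build_vmaf_command(distorted, reference, log_path="vmaf.json"):
    """Return an ffmpeg argument list that scores `distorted` against `reference`."""
    # The libvmaf filter takes the distorted (re-encoded) clip as the first
    # input and the reference (original) clip as the second input.
    # Assumption: log_path contains no characters that need filter escaping.
    vmaf_filter = f"libvmaf=log_path={log_path}:log_fmt=json"
    return [
        "ffmpeg",
        "-i", distorted,
        "-i", reference,
        "-lavfi", vmaf_filter,
        "-f", "null", "-",  # discard the video output; only the score is wanted
    ]


def run_vmaf(distorted, reference, log_path="vmaf.json"):
    """Run the comparison; requires an ffmpeg build with libvmaf enabled."""
    subprocess.run(build_vmaf_command(distorted, reference, log_path), check=True)


if __name__ == "__main__":
    # Hypothetical file names: a fifth-generation re-encode vs. the camera original.
    print(" ".join(build_vmaf_command("generation5.mov", "original.mov")))
```

To measure generation loss, you would score each successive re-encode against the same camera original and watch how the VMAF score drops from one generation to the next. The score is printed at the end of the ffmpeg run and also written to the JSON log.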